1 token per second... Terrific!

I love things built in tiny form factors. I am a previous user of two iPhone minis, current user of the iPad mini, and now a loyal fan of Ricoh's GR lineup. As a common characteristic of all these products, they all have small footprints but simultaneously being less competent compared to their "regular sized" peers: the iPhone minis have "UPS" level battery life while iPad minis still embraced the jelly-liked LCD display. Ricoh's GR is a bit special but still cannot be compared to those full-frame cameras. Among all my collections, there is one special category called "single board computer" (SBC) which I am fond of. Just as implied in their name, they are computers in credit card sizes. Yet they are no exception to the general rule I mentioned above: They are extremely slow compared to any regular compute. When I say slow, I really mean it. Wanna open browser? Click the icon and you can go and grab a cup of coffee as it will took "forever". Unlike other computer which offers you "open-box" experiences, all SBCs demand some level of "tweaking" which stipulates secretion of dopamine and gives you a sense of achievement. In other words, the more time you spend in actualizing a function that other computers can do in an instant, the more "joy" you will gain (assuming you can actually do it).

Jetson, You Nasty Boy

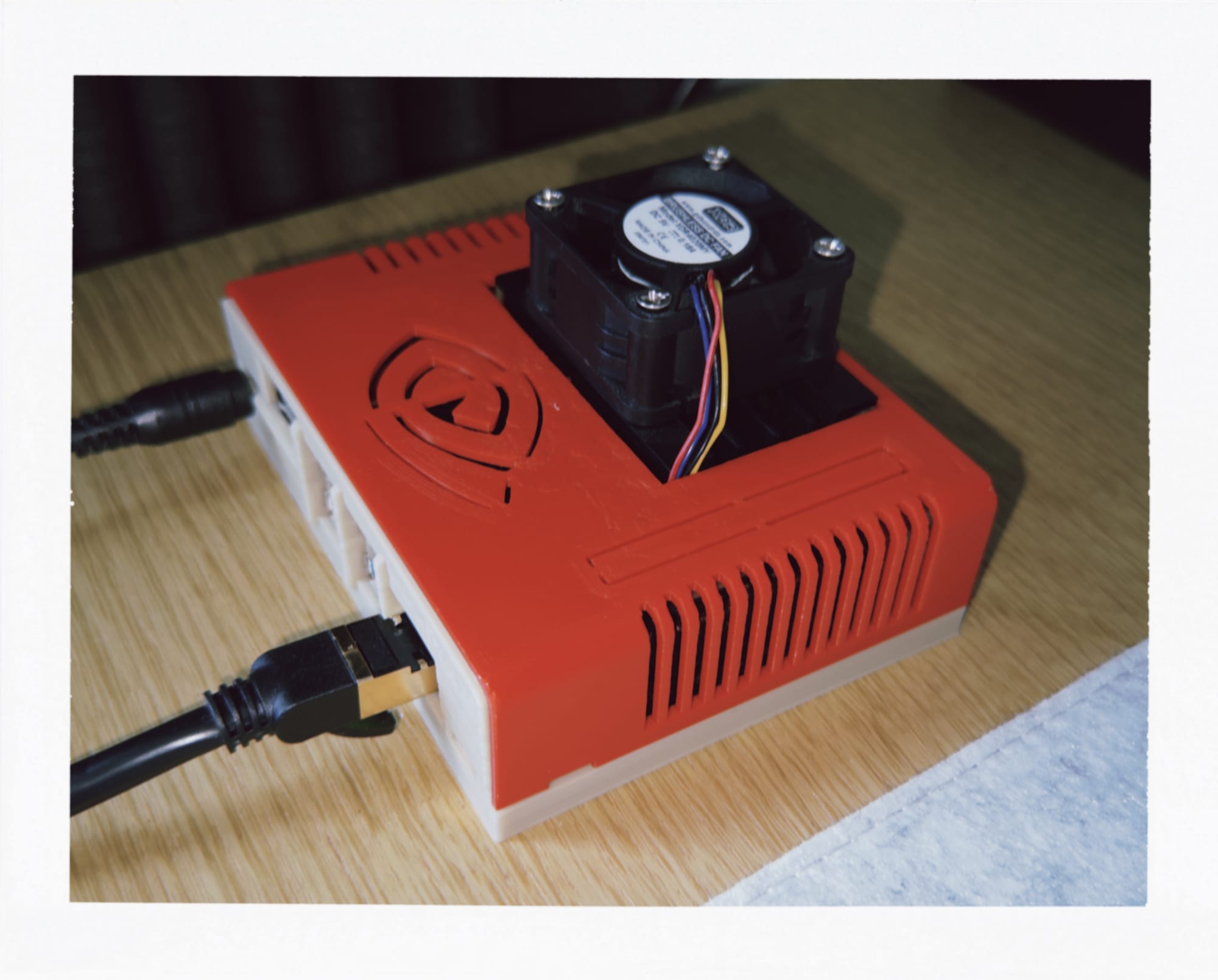

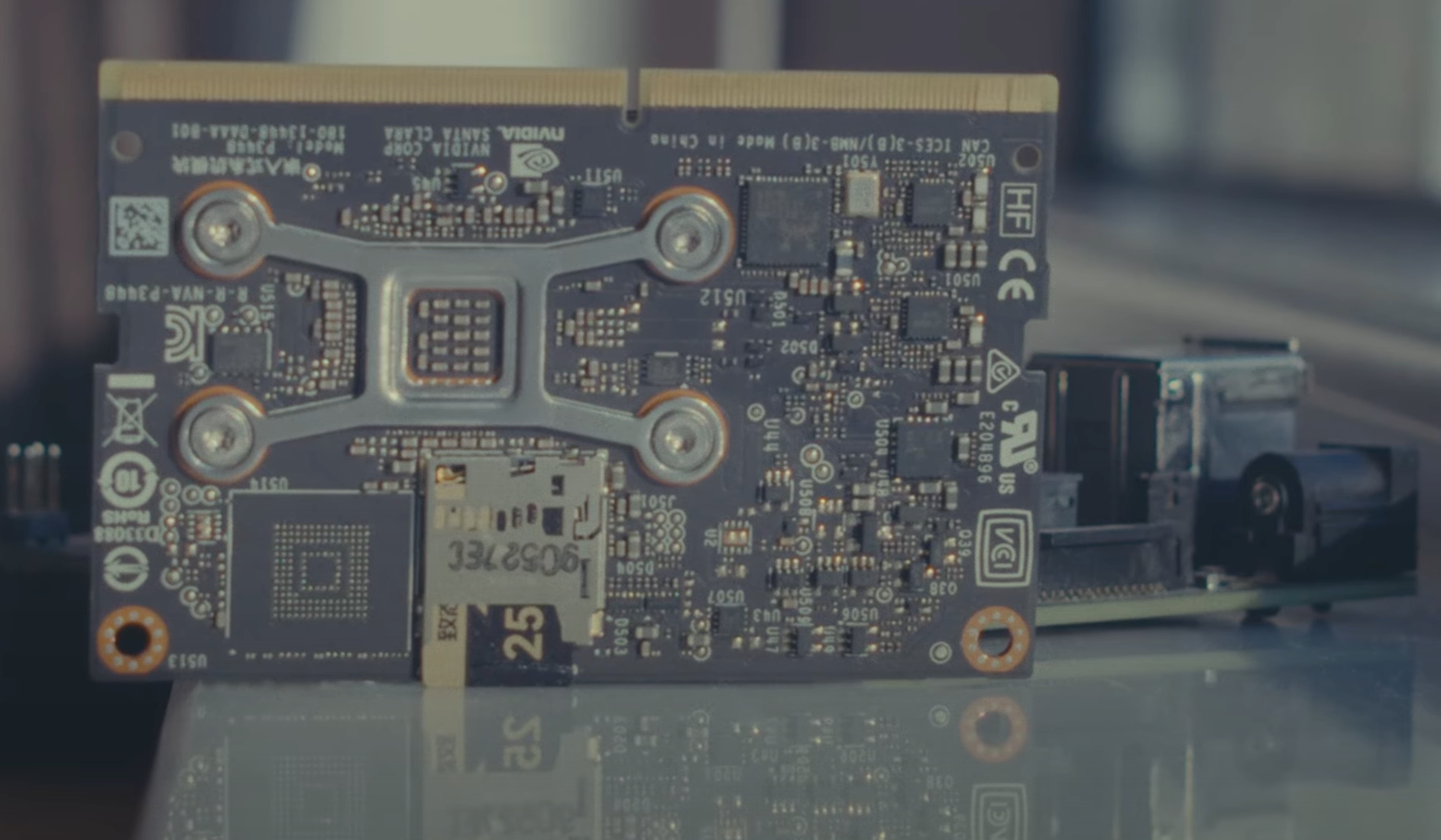

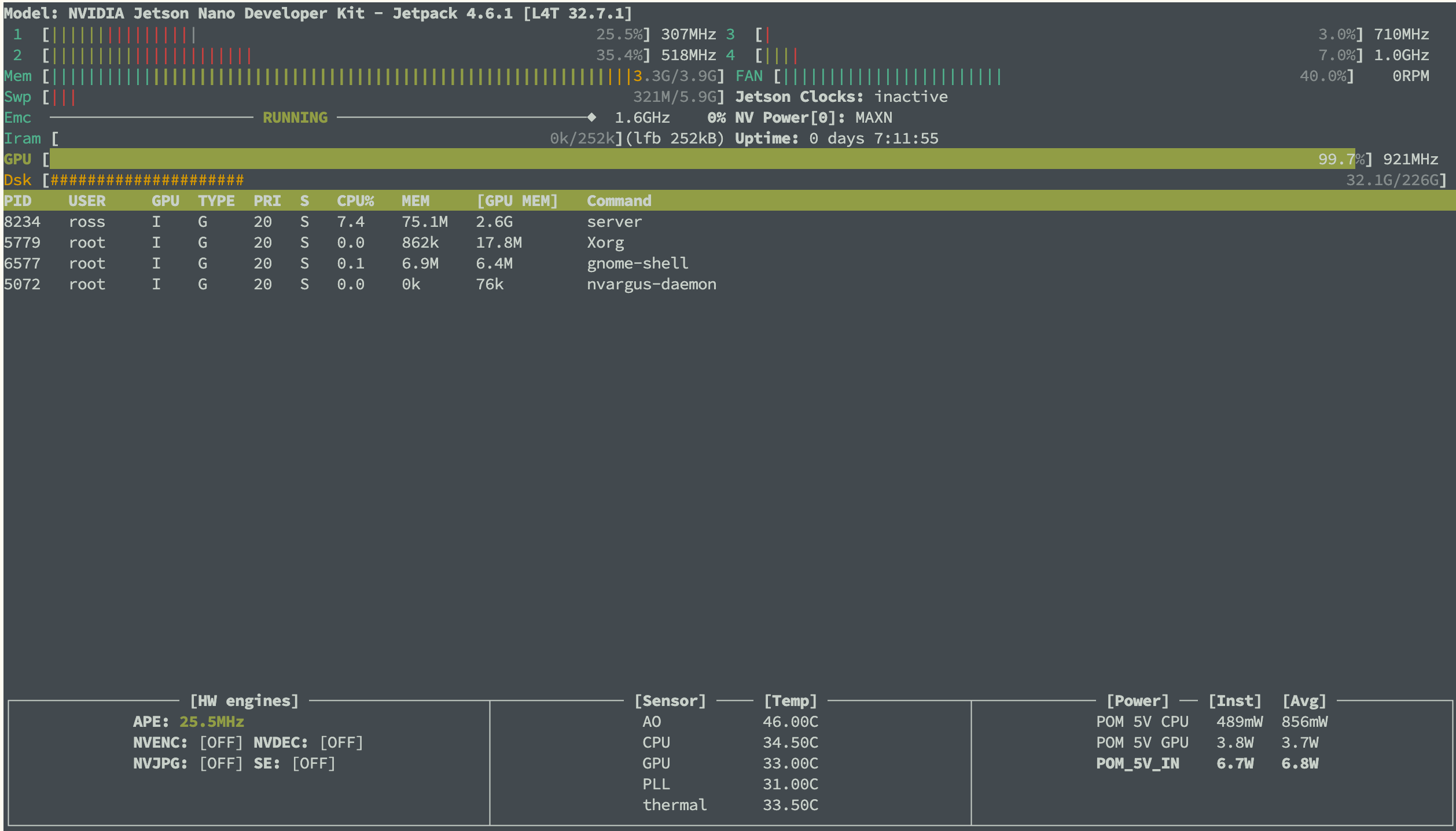

Right after realizing my Pi 400 may not suits well for ML related "developing", I bought the Jetson Nano from a guy in a fair price. Why Jetson? Maybe because I saw the exact model in the university lab. However, I soon realized how obsolete the board is after I power on the machine (actually you could already tell before power on the machine as it still a micro-usb interface). Apart from the same old laggy feeling from interact with most GUI, the compiling process on this board literally took days. For instances, I tried to compile the opencv with CUDA support, which is essential for any later computer vision applications. The process started at 7PM and it was not finished until the next morning. To give you a rough idea how slow the quad core armv8 cpu is, I ran sysbench on both Jetson and my m4 Mac mini.

On jetson

ross@jetson:~$ sysbench cpu --threads=1 run

sysbench 1.0.11 (using system LuaJIT 2.1.0-beta3)

Running the test with following options:

Number of threads: 1

Initializing random number generator from current time

Prime numbers limit: 10000

Initializing worker threads...

Threads started!

CPU speed:

events per second: 1200.75

General statistics:

total time: 10.0003s

total number of events: 12013

Latency (ms):

min: 0.82

avg: 0.83

max: 0.90

95th percentile: 0.84

sum: 9990.90

Threads fairness:

events (avg/stddev): 12013.0000/0.00

execution time (avg/stddev): 9.9909/0.00

On my Mac Mini

(base) ross@Rosss-M4-Mac-mini ~ % sysbench cpu --threads=1 run

sysbench 1.0.20 (using system LuaJIT 2.1.1727870382)

Running the test with following options:

Number of threads: 1

Initializing random number generator from current time

Prime numbers limit: 10000

Initializing worker threads...

Threads started!

CPU speed:

events per second: 17696878.01

General statistics:

total time: 10.0000s

total number of events: 176975381

Latency (ms):

min: 0.00

avg: 0.00

max: 0.03

95th percentile: 0.00

sum: 5518.33

Threads fairness:

events (avg/stddev): 176975381.0000/0.00

execution time (avg/stddev): 5.5183/0.00

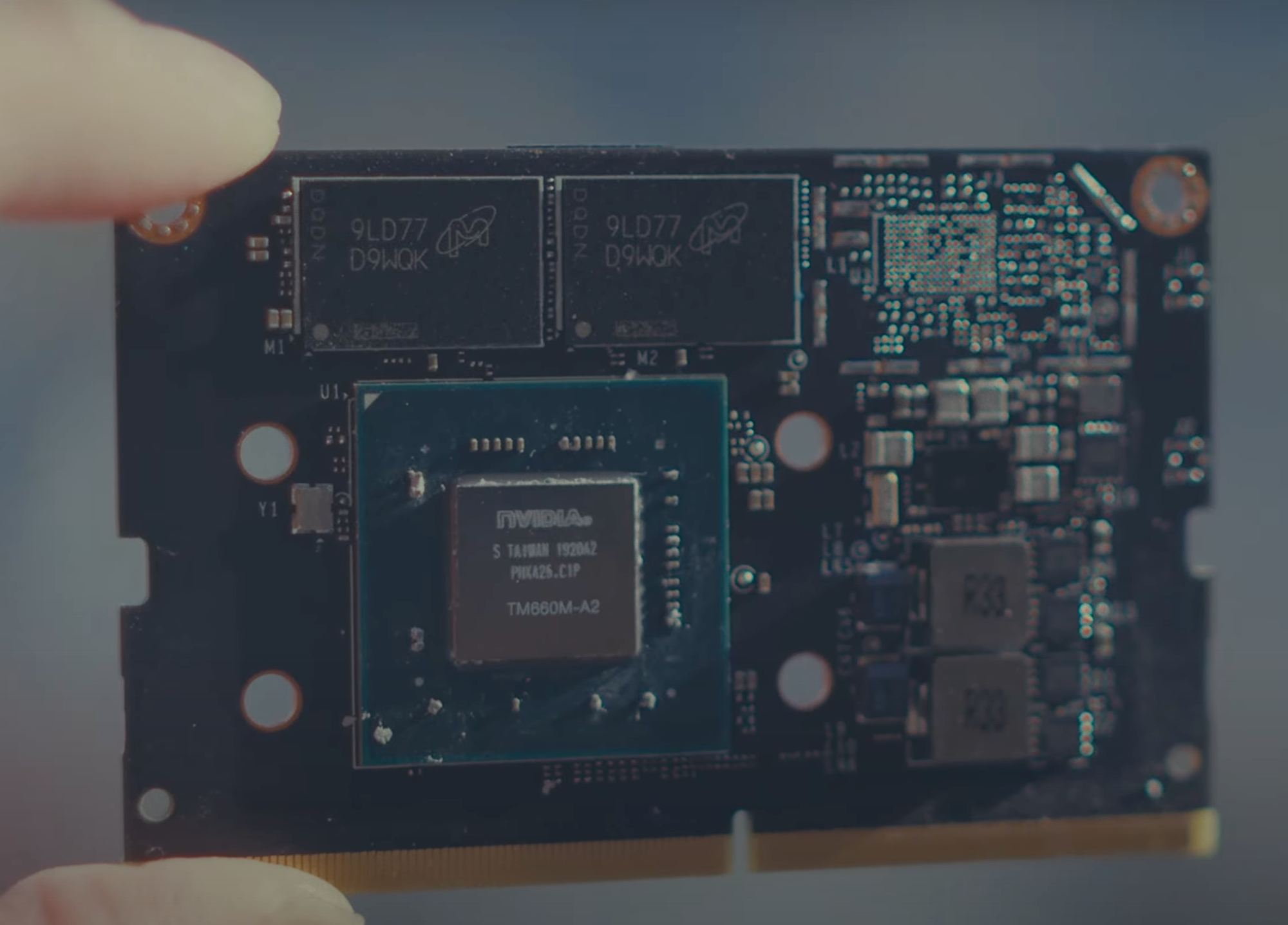

Did I mention CUDA? Ah indeed the board is one and only as it is a product of NVIDIA with "genuine" GPU. However, NVIDIA is not a company which famous for providing continuous supports. By the time I got the board, it was long out of official supports. There was no public driver also. Which means that you basically stuck at the last build.

ross@jetson:~$ sudo neofetch

.-/+oossssoo+/-. root@jetson

`:+ssssssssssssssssss+:` -----------

-+ssssssssssssssssssyyssss+- OS: Ubuntu 18.04.6 LTS aarch64

.ossssssssssssssssssdMMMNysssso. Host: NVIDIA Jetson Nano Developer Kit

/ssssssssssshdmmNNmmyNMMMMhssssss/ Kernel: 4.9.253-tegra

+ssssssssshmydMMMMMMMNddddyssssssss+ Uptime: 3 hours, 32 mins

/sssssssshNMMMyhhyyyyhmNMMMNhssssssss/ Packages: 2383

.ssssssssdMMMNhsssssssssshNMMMdssssssss. Shell: bash 4.4.20

+sssshhhyNMMNyssssssssssssyNMMMysssssss+ Theme: Adwaita [GTK3]

ossyNMMMNyMMhsssssssssssssshmmmhssssssso Icons: Adwaita [GTK3]

ossyNMMMNyMMhsssssssssssssshmmmhssssssso CPU: (4) @ 1.479GHz

+sssshhhyNMMNyssssssssssssyNMMMysssssss+ Memory: 853MiB / 3964MiB

.ssssssssdMMMNhsssssssssshNMMMdssssssss.

/sssssssshNMMMyhhyyyyhdNMMMNhssssssss/

+sssssssssdmydMMMMMMMMddddyssssssss+

/ssssssssssshdmNNNNmyNMMMMhssssss/

.ossssssssssssssssssdMMMNysssso.

-+sssssssssssssssssyyyssss+-

`:+ssssssssssssssssss+:`

.-/+oossssoo+/-.

As for its most attractive feature: CUDA acceleration. Well, let's say they are not doing the fake advertising. You do have cuda toolkit built-in the system image. But also an ancient one without any practical usage.

ross@jetson:~$ nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2021 NVIDIA Corporation

Built on Sun_Feb_28_22:34:44_PST_2021

Cuda compilation tools, release 10.2, V10.2.300

Build cuda_10.2_r440.TC440_70.29663091_0

PyTorch? Aha, there is PyTorch with CUDA support. But also came with caviar. Nvidia's official repo does not provide you with any information for this old timer but you can find some traits from the developer forum and install the 1.1 version.

### install the dependencies

sudo apt-get install -y libopenmpi-dev

### fetch the latest wheel

wget https://nvidia.box.com/shared/static/fjtbno0vpo676a25cgvuqc1wty0fkkg6.whl

mv fjtbno0vpo676a25cgvuqc1wty0fkkg6.whl torch-1.10.0-cp36-cp36m-linux_aarch64.whl

### install

python3 -m pip install torch-1.10.0-cp36-cp36m-linux_aarch64.whl

### Add install to bash

nano ~/.bashrc

export PATH=$PATH:/home/ross/.local/bin

source ~/.bashrc

### verify install

python3 -c "import torch; print(torch.__version__)"

1.10.0

With all the catches sum up together, I put it back to the case and have not been using it for a long time.

Jetson, Long Time No See

Coincidentally, I picked it up again. With recent butter smooth experiences of deploying LLM using llama.cpp on latest Jetson Orin Nano Super (much more advanced) and boosted eager, I decided to give it a try and stress the tiny chip out.

Under normal circumstances, build llama.cpp was quite easy. You simply clone the repo and then using CMake to build it up. However, for this Jetson, it's a huge project. I found a GitHub repo that relevant to the case:

FlorSanders/JetsonNano2GB_LlamaCpp_SetupGuide.md

The post have demonstrated a successful build using gcc. For my Jetson model, the only thing you have to amend will be during final build:

Instead of define the ARCH to TX GPU

make LLAMA_CUBLAS=1 CUDA_DOCKER_ARCH=sm_62

Should use Nano ARCH

make LLAMA_CUBLAS=1 CUDA_DOCKER_ARCH=sm_53 -j 4

Even though I described it as something simple, the process will took you forever as the GCC build is time-consuming. So, never start a build project at night.

After the build, it's time to run some model. However, as the llama.cpp version has been obsolete for quite a long time. It does not support any modern model architecture like gemma3 or even llama3.2. This could be a problem as Jetson's tiny little 4GB RAM cannot hold much model weights and bias and both gemma3 and llama3.2 provides small "large language model" with acceptable performance. After countless run of test, I found Phi2 in Q4_K_M quantization can be loaded into the RAM and ran without problem.

ross@jetson:~/llama.cpp$ ./server -m phi-2.Q4_K_M.gguf -ngl 33

ggml_init_cublas: GGML_CUDA_FORCE_MMQ: no

ggml_init_cublas: CUDA_USE_TENSOR_CORES: yes

ggml_init_cublas: found 1 CUDA devices:

Device 0: NVIDIA Tegra X1, compute capability 5.3, VMM: no

{"build":2275,"commit":"a33e6a0d","function":"main","level":"INFO","line":2796,"msg":"build info","tid":"547893403664","timestamp":1751703044}

{"function":"main","level":"INFO","line":2803,"msg":"system info","n_threads":4,"n_threads_batch":-1,"system_info":"AVX = 0 | AVX_VNNI = 0 | AVX2 = 0 | AVX512 = 0 | AVX512_VBMI = 0 | AVX512_VNNI = 0 | FMA = 0 | NEON = 1 | ARM_FMA = 1 | F16C = 0 | FP16_VA = 0 | WASM_SIMD = 0 | BLAS = 1 | SSE3 = 0 | SSSE3 = 0 | VSX = 0 | MATMUL_INT8 = 0 | ","tid":"547893403664","timestamp":1751703044,"total_threads":4}

llama_model_loader: loaded meta data with 20 key-value pairs and 325 tensors from phi-2.Q4_K_M.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = phi2

llama_model_loader: - kv 1: general.name str = Phi2

llama_model_loader: - kv 2: phi2.context_length u32 = 2048

llama_model_loader: - kv 3: phi2.embedding_length u32 = 2560

llama_model_loader: - kv 4: phi2.feed_forward_length u32 = 10240

llama_model_loader: - kv 5: phi2.block_count u32 = 32

llama_model_loader: - kv 6: phi2.attention.head_count u32 = 32

llama_model_loader: - kv 7: phi2.attention.head_count_kv u32 = 32

llama_model_loader: - kv 8: phi2.attention.layer_norm_epsilon f32 = 0.000010

llama_model_loader: - kv 9: phi2.rope.dimension_count u32 = 32

llama_model_loader: - kv 10: general.file_type u32 = 15

llama_model_loader: - kv 11: tokenizer.ggml.add_bos_token bool = false

llama_model_loader: - kv 12: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 13: tokenizer.ggml.tokens arr[str,51200] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 14: tokenizer.ggml.token_type arr[i32,51200] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 15: tokenizer.ggml.merges arr[str,50000] = ["Ġ t", "Ġ a", "h e", "i n", "r e",...

llama_model_loader: - kv 16: tokenizer.ggml.bos_token_id u32 = 50256

llama_model_loader: - kv 17: tokenizer.ggml.eos_token_id u32 = 50256

llama_model_loader: - kv 18: tokenizer.ggml.unknown_token_id u32 = 50256

llama_model_loader: - kv 19: general.quantization_version u32 = 2

llama_model_loader: - type f32: 195 tensors

llama_model_loader: - type q4_K: 81 tensors

llama_model_loader: - type q5_K: 32 tensors

llama_model_loader: - type q6_K: 17 tensors

llm_load_vocab: mismatch in special tokens definition ( 910/51200 vs 944/51200 ).

llm_load_print_meta: format = GGUF V3 (latest)

llm_load_print_meta: arch = phi2

llm_load_print_meta: vocab type = BPE

llm_load_print_meta: n_vocab = 51200

llm_load_print_meta: n_merges = 50000

llm_load_print_meta: n_ctx_train = 2048

llm_load_print_meta: n_embd = 2560

llm_load_print_meta: n_head = 32

llm_load_print_meta: n_head_kv = 32

llm_load_print_meta: n_layer = 32

llm_load_print_meta: n_rot = 32

llm_load_print_meta: n_embd_head_k = 80

llm_load_print_meta: n_embd_head_v = 80

llm_load_print_meta: n_gqa = 1

llm_load_print_meta: n_embd_k_gqa = 2560

llm_load_print_meta: n_embd_v_gqa = 2560

llm_load_print_meta: f_norm_eps = 1.0e-05

llm_load_print_meta: f_norm_rms_eps = 0.0e+00

llm_load_print_meta: f_clamp_kqv = 0.0e+00

llm_load_print_meta: f_max_alibi_bias = 0.0e+00

llm_load_print_meta: n_ff = 10240

llm_load_print_meta: n_expert = 0

llm_load_print_meta: n_expert_used = 0

llm_load_print_meta: pooling type = 0

llm_load_print_meta: rope type = 2

llm_load_print_meta: rope scaling = linear

llm_load_print_meta: freq_base_train = 10000.0

llm_load_print_meta: freq_scale_train = 1

llm_load_print_meta: n_yarn_orig_ctx = 2048

llm_load_print_meta: rope_finetuned = unknown

llm_load_print_meta: model type = 3B

llm_load_print_meta: model ftype = Q4_K - Medium

llm_load_print_meta: model params = 2.78 B

llm_load_print_meta: model size = 1.66 GiB (5.14 BPW)

llm_load_print_meta: general.name = Phi2

llm_load_print_meta: BOS token = 50256 '<|endoftext|>'

llm_load_print_meta: EOS token = 50256 '<|endoftext|>'

llm_load_print_meta: UNK token = 50256 '<|endoftext|>'

llm_load_print_meta: LF token = 128 'Ä'

llm_load_tensors: ggml ctx size = 0.25 MiB

llm_load_tensors: offloading 32 repeating layers to GPU

llm_load_tensors: offloading non-repeating layers to GPU

llm_load_tensors: offloaded 33/33 layers to GPU

llm_load_tensors: CPU buffer size = 70.31 MiB

llm_load_tensors: CUDA0 buffer size = 1634.32 MiB

....................................................................................

llama_new_context_with_model: n_ctx = 512

llama_new_context_with_model: freq_base = 10000.0

llama_new_context_with_model: freq_scale = 1

llama_kv_cache_init: CUDA0 KV buffer size = 160.00 MiB

llama_new_context_with_model: KV self size = 160.00 MiB, K (f16): 80.00 MiB, V (f16): 80.00 MiB

llama_new_context_with_model: CUDA_Host input buffer size = 7.01 MiB

llama_new_context_with_model: CUDA0 compute buffer size = 110.00 MiB

llama_new_context_with_model: CUDA_Host compute buffer size = 5.00 MiB

llama_new_context_with_model: graph splits (measure): 2

{"function":"initialize","level":"INFO","line":487,"msg":"initializing slots","n_slots":1,"tid":"547893403664","timestamp":1751703081}

{"function":"initialize","level":"INFO","line":499,"msg":"new slot","n_ctx_slot":512,"slot_id":0,"tid":"547893403664","timestamp":1751703081}

{"function":"main","level":"INFO","line":3039,"msg":"model loaded","tid":"547893403664","timestamp":1751703081}

{"function":"main","hostname":"127.0.0.1","level":"INFO","line":3498,"msg":"HTTP server listening","port":"8080","tid":"547893403664","timestamp":1751703081}

{"function":"update_slots","level":"INFO","line":1605,"msg":"all slots are idle and system prompt is empty, clear the KV cache","tid":"547893403664","timestamp":1751703081}

The model was successfully loaded into "VRAM" and GPU was working hard to generate the tokens. As we serve the model in standard open-ai chat format. We can compose a script to utilize the API.

#!/bin/bash

SERVER_URL="http://localhost:8080/completion"

MODEL="Phi-2"

HISTORY=""

echo "🧠 Welcome to $MODEL (plain chat mode)"

echo "Type 'exit' or Ctrl+C to quit."

echo

while true; do

read -p "You: " USER_INPUT

if [[ "$USER_INPUT" == "exit" ]]; then

echo "👋 Bye!"

break

fi

# Build the full conversation prompt

PROMPT="${HISTORY}User: ${USER_INPUT}\nAssistant:"

JSON=$(jq -n \

--arg prompt "$PROMPT" \

--argjson n_predict 128 \

'{prompt: $prompt, n_predict: $n_predict}')

RESPONSE=$(curl -s -X POST -H "Content-Type: application/json" \

-d "$JSON" "$SERVER_URL")

OUTPUT=$(echo "$RESPONSE" | jq -r '.content // .response // .completion // "⚠️ Invalid response"')

echo -e "🧠 $MODEL: $OUTPUT"

echo

# Append current turn to history

HISTORY+="User: ${USER_INPUT}\nAssistant: ${OUTPUT}\n"

done

The model could be useful to some extent (could be more useful than most RPA).

ross@jetson:~$ ./phi_chat.sh

🧠 Welcome to Phi-2 (plain chat mode)

Type 'exit' or Ctrl+C to quit.

You: Explain overfitting

🧠 Phi-2: Overfitting is a problem that occurs when a machine learning model learns too much from the training data and fails to generalize well to new or unseen data. This means that the model has memorized the noise, outliers, or irrelevant details of the training data, rather than capturing the true underlying patterns or relationships. As a result, the overfitted model performs poorly on test or validation data, as it cannot distinguish between the relevant and irrelevant features. Overfitting can reduce the accuracy, robustness, and interpretability of the machine learning model, and may lead to unrealistic or biased predictions.

You: What about the opposite of overfitting

🧠 Phi-2: The opposite of overfitting is underfitting. Underfitting occurs when a machine learning model is too simple or complex for the given data and does not capture the important features or patterns. This means that the model fails to learn enough from the training data and cannot generalize well to new or unseen data. As a result, the underfitted model performs poorly on both test and validation data, as it cannot represent the true behavior of the problem. Underfitting can reduce the accuracy, robustness, and interpretability of the machine learning model, and may lead to inaccurate or noisy predictions.

I am glad you are not curious about the inference speed. What, you are curious about the speed. Okay, prepare yourself for the world slowest GPU-accelerated eval rate.

{"function":"print_timings","level":"INFO","line":319,"msg":"prompt eval time = 9034.79 ms / 143 tokens ( 63.18 ms per token, 15.83 tokens per second)","n_tokens_second":15.827699004537347,"num_prompt_tokens_processed":143,"slot_id":0,"t_prompt_processing":9034.794,"t_token":63.18037762237762,"task_id":122,"tid":"547893403664","timestamp":1751703487}

{"function":"print_timings","level":"INFO","line":333,"msg":"generation eval time = 71475.94 ms / 118 runs ( 605.73 ms per token, 1.65 tokens per second)","n_decoded":118,"n_tokens_second":1.6509050933276235,"slot_id":0,"t_token":605.7283389830509,"t_token_generation":71475.944,"task_id":122,"tid":"547893403664","timestamp":1751703487}

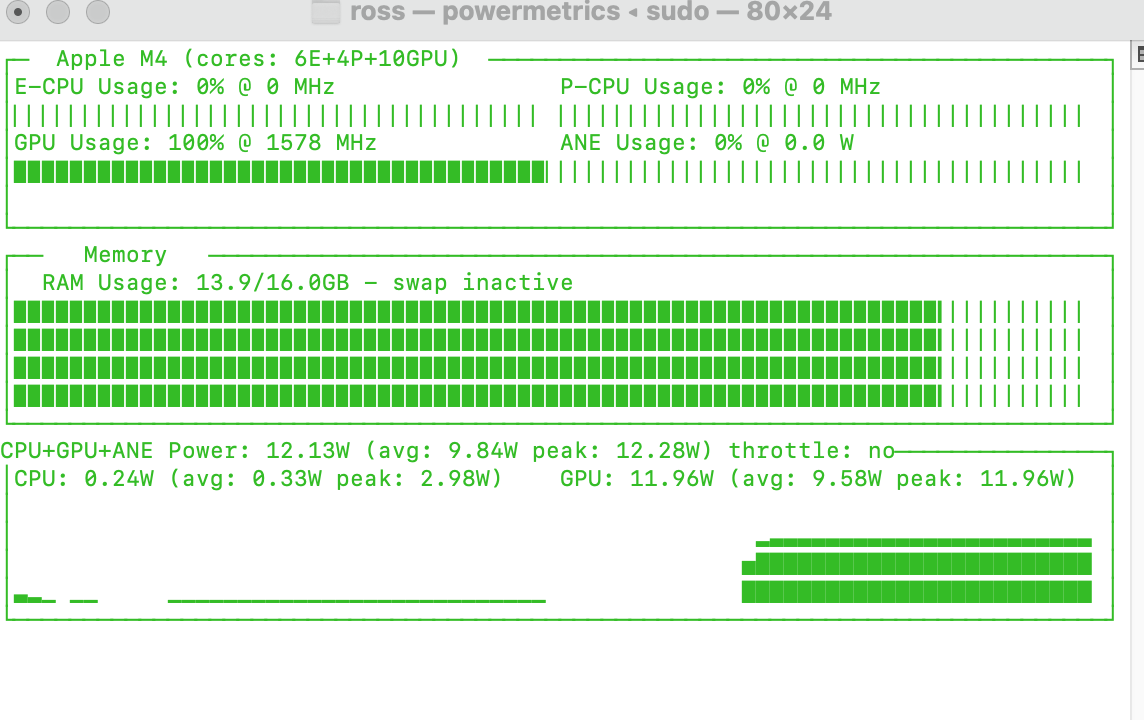

1.65 tokens per second. A world record! You may justify the speed for the low power consumption. But for your information, the Mac mini running a much larger model can do 15.57 tokens per second at 12w.

prompt eval time = 222.83 ms / 11 tokens ( 20.26 ms per token, 49.36 tokens per second)

eval time = 32042.65 ms / 499 tokens ( 64.21 ms per token, 15.57 tokens per second)

total time = 32265.49 ms / 510 tokens

TL DR

Buy a Mac mini.